A systematic review is a scholarly synthesis of the evidence on a clearly presented topic using critical methods to identify, define and assess research on the topic.[1] A systematic review extracts and interprets data from published studies on the topic (in the scientific literature), then analyzes, describes, critically appraises and summarizes interpretations into a refined evidence-based conclusion.[1][2] For example, a systematic review of randomized controlled trials is a way of summarizing and implementing evidence-based medicine.[3]

While a systematic review may be applied in the biomedical or health care context, it may also be used where an assessment of a precisely defined subject can advance understanding in a field of research.[4] A systematic review may examine clinical tests, public health interventions, environmental interventions,[5] social interventions, adverse effects, qualitative evidence syntheses, methodological reviews, policy reviews, and economic evaluations.[6][7]

Systematic reviews are closely related to meta-analyses, and a single publication often combines both (typically with a subtitle such as "a systematic review and meta-analysis"). The distinction between the two is that a meta-analysis uses statistical methods to derive a single summary figure (such as an effect size) from the pooled data set, whereas the strict definition of a systematic review excludes that step. In practice, however, a mention of one often implies the other: it takes a systematic review to assemble the information that a meta-analysis analyzes, and people sometimes refer to a publication as a systematic review even if it includes a meta-analytical component.

An understanding of systematic reviews and how to implement them in practice is common for professionals in health care, public health, and public policy.[1]

Systematic reviews contrast with a type of review often called a narrative review. Systematic reviews and narrative reviews both review the literature (the scientific literature), but the term literature review without further specification refers to a narrative review.

Characteristics

A systematic review can be designed to provide a thorough summary of current literature relevant to a research question.[1] A systematic review uses a rigorous and transparent approach for research synthesis, with the aim of assessing and, where possible, minimizing bias in the findings. While many systematic reviews are based on an explicit quantitative meta-analysis of available data, there are also qualitative reviews and other types of mixed-methods reviews which adhere to standards for gathering, analyzing and reporting evidence.[8]

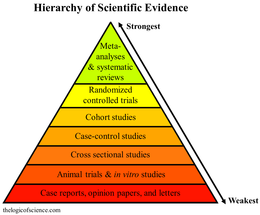

Systematic reviews of quantitative data or mixed-method reviews sometimes use statistical techniques (meta-analysis) to combine results of eligible studies. Scoring levels are sometimes used to rate the quality of the evidence depending on the methodology used, although this is discouraged by the Cochrane Library.[9] As evidence rating can be subjective, multiple people may be consulted to resolve any scoring differences between how evidence is rated.[10][11][12]

The EPPI-Centre, Cochrane, and the Joanna Briggs Institute have been influential in developing methods for combining both qualitative and quantitative research in systematic reviews.[13][14][15] Several reporting guidelines exist to standardise reporting about how systematic reviews are conducted. Such reporting guidelines are not quality assessment or appraisal tools. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement[16] suggests a standardized way to ensure a transparent and complete reporting of systematic reviews, and is now required for this kind of research by more than 170 medical journals worldwide.[17] Several specialized PRISMA guideline extensions have been developed to support particular types of studies or aspects of the review process, including PRISMA-P for review protocols and PRISMA-ScR for scoping reviews.[17] A list of PRISMA guideline extensions is hosted by the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network.[18] However, the PRISMA guidelines have been found to be limited to intervention research and the guidelines have to be changed in order to fit non-intervention research. As a result, Non-Interventional, Reproducible, and Open (NIRO) Systematic Reviews was created to counter this limitation.[19]

For qualitative reviews, reporting guidelines include ENTREQ (Enhancing transparency in reporting the synthesis of qualitative research) for qualitative evidence syntheses; RAMESES (Realist And MEta-narrative Evidence Syntheses: Evolving Standards) for meta-narrative and realist reviews;[20][21] and eMERGe (Improving reporting of Meta-Ethnography) for meta-ethnography.[13]

Developments in systematic reviews during the 21st century included realist reviews and the meta-narrative approach, both of which addressed problems of variation in methods and heterogeneity existing on some subjects.[22][23]

Types

There are over 30 types of systematic review, and Table 1 below summarises some of these non-exhaustively.[17][16] There is not always consensus on the boundaries and distinctions between the approaches described below.

| Review type | Summary |
|---|---|
| Mapping review/systematic map | A mapping review maps existing literature and categorizes data. The method characterizes quantity and quality of literature, including by study design and other features. Mapping reviews can be used to identify the need for primary or secondary research.[17] |
| Meta-analysis | A meta-analysis is a statistical analysis that combines the results of multiple quantitative studies. Using statistical methods, results are combined to provide evidence from multiple studies. The two types of data generally used for meta-analysis in health research are individual participant data and aggregate data (such as odds ratios or relative risks). |
| Mixed studies review/mixed methods review | Refers to any combination of methods where one significant stage is a literature review (often systematic). It can also refer to a combination of review approaches such as combining quantitative with qualitative research.[17] |
| Qualitative systematic review/qualitative evidence synthesis | This method integrates or compares findings from qualitative studies. The method can include 'coding' the data and looking for 'themes' or 'constructs' across studies. Multiple authors may improve the 'validity' of the data by potentially reducing individual bias.[17] |
| Rapid review | An assessment of what is already known about a policy or practice issue, which uses systematic review methods to search for and critically appraise existing research. Rapid reviews are still systematic reviews; however, parts of the process may be simplified or omitted in order to increase speed.[24] Rapid reviews were used during the COVID-19 pandemic.[25] |
| Systematic review | A systematic search for data, using a repeatable method. It includes appraising the data (for example the quality of the data) and a synthesis of research data. |
| Systematic search and review | Combines methods from a 'critical review' with a comprehensive search process. This review type is usually used to address broad questions to produce the most appropriate evidence synthesis. This method may or may not include quality assessment of data sources.[17] |
| Systematized review | Includes elements of the systematic review process, but searching is often not as comprehensive as in a systematic review and may not include quality assessments of data sources. |

Scoping reviews

Scoping reviews are distinct from systematic reviews in several ways. A scoping review is an attempt to search for concepts by mapping the language and data which surround those concepts and adjusting the search method iteratively to synthesize evidence and assess the scope of an area of inquiry.[22][23] This can mean that the concept search and method (including data extraction, organisation and analysis) are refined throughout the process, sometimes requiring deviations from any protocol or original research plan.[26][27] A scoping review may often be a preliminary stage before a systematic review, which 'scopes' out an area of inquiry and maps the language and key concepts to determine if a systematic review is possible or appropriate, or to lay the groundwork for a full systematic review. The goal can be to assess how much data or evidence is available regarding a certain area of interest.[26][28] This process is further complicated if it is mapping concepts across multiple languages or cultures.

As a scoping review should be systematically conducted and reported (with a transparent and repeatable method), some academic publishers categorize them as a kind of 'systematic review', which may cause confusion. Scoping reviews are helpful when it is not possible to carry out a systematic synthesis of research findings, for example, when there are no published clinical trials in the area of inquiry. Scoping reviews are helpful when determining if it is possible or appropriate to carry out a systematic review, and are a useful method when an area of inquiry is very broad,[29] for example, exploring how the public are involved in all stages of systematic reviews.[30]

There is still a lack of clarity when defining the exact method of a scoping review, as it is an iterative process and is still relatively new.[31] There have been several attempts to improve the standardisation of the method,[27][26][28][32] for example via a Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guideline extension for scoping reviews (PRISMA-ScR).[33] PROSPERO (the International Prospective Register of Systematic Reviews) does not permit the submission of protocols of scoping reviews,[34] although some journals will publish protocols for scoping reviews.[30]

Stages

While there are multiple kinds of systematic review methods, the main stages of a review can be summarised as follows:

Defining the research question

Some reported that the 'best practices' involve 'defining an answerable question' and publishing the protocol of the review before initiating it to reduce the risk of unplanned research duplication and to enable transparency and consistency between methodology and protocol.[35][36] Clinical reviews of quantitative data are often structured using the mnemonic PICO, which stands for 'Population or Problem', 'Intervention or Exposure', 'Comparison', and 'Outcome', with other variations existing for other kinds of research. For qualitative reviews, PICo is 'Population or Problem', 'Interest', and 'Context'.
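As an illustration only, the PICO elements can be held in a small structured record so that each part of the question is stated explicitly before searching begins. The sketch below is in Python; the class name `PicoQuestion`, its fields, and the example question are hypothetical and are not part of any published standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical container for the four PICO elements."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_sentence(self) -> str:
        # Assemble the four elements into one answerable question.
        return (
            f"In {self.population}, does {self.intervention}, "
            f"compared with {self.comparison}, affect {self.outcome}?"
        )


# Illustrative example only; not drawn from any actual review.
question = PicoQuestion(
    population="adults with hypertension",
    intervention="a low-sodium diet",
    comparison="a usual diet",
    outcome="systolic blood pressure",
)
print(question.as_sentence())
```

A qualitative review using PICo could follow the same pattern with three fields (population, interest, context) instead of four.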

Searching for sources

Relevant criteria can include selecting research that is of good quality and answers the defined question.[35] The search strategy should be designed to retrieve literature that matches the protocol's specified inclusion and exclusion criteria. The methodology section of a systematic review should list all of the databases and citation indices that were searched. The titles and abstracts of identified articles can be checked against predetermined criteria for eligibility and relevance. Each included study may be assigned an objective assessment of methodological quality, preferably by using methods conforming to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement,[18] or the standards of Cochrane.[37]

Common information sources used in searches include scholarly databases of peer-reviewed articles such as MEDLINE, Web of Science, Embase, and PubMed, as well as sources of unpublished literature such as clinical trial registries and grey literature collections. Key references can also be yielded through additional methods such as citation searching, reference list checking (related to a search method called 'pearl growing'), manually searching information sources not indexed in the major electronic databases (sometimes called 'hand-searching'),[38] and directly contacting experts in the field.[39]

To be systematic, searchers must use a combination of search skills and tools such as database subject headings, keyword searching, Boolean operators, and proximity searching, while attempting to balance sensitivity (systematicity) and precision (accuracy). Inviting and involving an experienced information professional or librarian can improve the quality of systematic review search strategies and reporting.[40][41][42][43][44]
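A minimal sketch of how Boolean operators combine search terms: synonyms for one concept are joined with OR, which tends to raise sensitivity, and the concepts are then joined with AND, which tends to raise precision. The function name `build_boolean_query` and the example terms are hypothetical. Real databases each have their own syntax for subject headings and proximity operators, which this sketch does not model.

```python
def build_boolean_query(concepts: list[list[str]]) -> str:
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word phrases so they are searched as a unit.
        quoted = [f'"{term}"' if " " in term else term for term in synonyms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Illustrative terms only.
query = build_boolean_query([
    ["hypertension", "high blood pressure"],
    ["sodium", "salt"],
])
print(query)
```

Adding a synonym to a group widens the search, while adding a further concept group narrows it, which is one way to picture the balance between sensitivity and precision.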

'Extraction' of relevant data

Relevant data are 'extracted' from the data sources according to the review method. The data extraction method is specific to the kind of data, and data extracted on 'outcomes' is only relevant to certain types of reviews. For example, a systematic review of clinical trials might extract data about how the research was done (often called the method or 'intervention'), who participated in the research (including how many people), how it was paid for (for example, funding sources) and what happened (the outcomes).[35] Relevant data are extracted and 'combined' in an intervention effect review, where a meta-analysis is possible.[45]

Assess the eligibility of the data

This stage involves assessing the eligibility of data for inclusion in the review, by judging it against criteria identified at the first stage.[35] This can include assessing if a data source meets the eligibility criteria, and recording why decisions about inclusion or exclusion in the review were made. Software can be used to support the selection process, including text mining tools and machine learning, which can automate aspects of the process.[46] The 'Systematic Review Toolbox' is a community driven, web-based catalogue of tools, to help reviewers choose appropriate tools for reviews.[47]
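The idea of judging each source against predetermined criteria and recording the reason for each decision can be sketched as follows. This is an assumption-laden illustration rather than the method of any particular tool: the `Record` class, the `screen` function, the two criteria (study design and publication year), and the sample records are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    """Hypothetical bibliographic record awaiting screening."""
    title: str
    study_design: str
    year: int


def screen(record: Record, allowed_designs: set[str], earliest_year: int) -> Optional[str]:
    """Return None if the record is eligible, else the reason for exclusion."""
    if record.study_design not in allowed_designs:
        return f"study design '{record.study_design}' not eligible"
    if record.year < earliest_year:
        return f"published before {earliest_year}"
    return None


# Made-up records for illustration.
records = [
    Record("Trial A", "randomized controlled trial", 2015),
    Record("Report B", "case report", 2018),
    Record("Trial C", "randomized controlled trial", 1985),
]

# Keep a decision log so that every inclusion or exclusion is traceable.
decisions = {
    r.title: screen(r, {"randomized controlled trial"}, earliest_year=1990)
    for r in records
}
included = [title for title, reason in decisions.items() if reason is None]
print(included)
print(decisions)
```

Storing the exclusion reason alongside each record, rather than only a yes/no flag, is what allows the review to report why decisions were made.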

Analyse and combine the data

Analysing and combining data can provide an overall result from all the data. Because this combined result may use qualitative or quantitative data from all eligible sources, it is considered more reliable than any single source: the more data a review includes, the more confident we can be of its conclusions. When appropriate, some systematic reviews include a meta-analysis, which uses statistical methods to combine data from multiple sources. A review might use quantitative data, or might employ a qualitative meta-synthesis, which synthesises data from qualitative studies. A review may also bring together the findings from quantitative and qualitative studies in a mixed methods or overarching synthesis.[48] The combination of data from a meta-analysis can sometimes be visualised. One method uses a forest plot (also called a blobbogram).[35] In an intervention effect review, the diamond in the 'forest plot' represents the combined results of all the data included.[35] An example of a 'forest plot' is the Cochrane Collaboration logo.[35] The logo is a forest plot of one of the first reviews which showed that corticosteroids given to women who are about to give birth prematurely can save the life of the newborn child.[49]
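One common way for a meta-analysis to combine results is inverse-variance weighting under a fixed-effect model. The text above does not name a specific method, so that choice is an assumption made here for illustration. Each study's effect estimate is weighted by the reciprocal of its variance; the pooled estimate is the weighted mean, and its standard error is the square root of one over the sum of the weights. The function name `pooled_fixed_effect` and all input numbers are made up.

```python
import math


def pooled_fixed_effect(effects: list[float], std_errors: list[float]) -> tuple[float, float]:
    """Inverse-variance weighted mean and its standard error (fixed-effect model)."""
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect estimate")
    # Weight each study by the reciprocal of its variance.
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)


# Made-up log odds ratios and standard errors for three hypothetical studies.
effects = [-0.5, -0.1, -0.3]
std_errors = [0.5, 0.5, 0.25]

estimate, se = pooled_fixed_effect(effects, std_errors)
# Approximate 95% confidence interval using the normal quantile 1.96.
low, high = estimate - 1.96 * se, estimate + 1.96 * se
print(f"pooled = {estimate:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

In a forest plot of these inputs, the diamond would conventionally be centred on `estimate` and span from `low` to `high`. A random-effects model, which allows for heterogeneity between studies, would weight the studies differently.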

Recent visualisation innovations include the albatross plot, which plots p-values against sample sizes, with approximate effect-size contours superimposed to facilitate analysis.[50] The contours can be used to infer effect sizes from studies that have been analysed and reported in diverse ways. Such visualisations may have advantages over other types when reviewing complex interventions.

Communication and dissemination

Once these stages are complete, the review may be published, disseminated, and translated into practice after being adopted as evidence. The UK National Institute for Health Research (NIHR) defines dissemination as "getting the findings of research to the people who can make use of them to maximise the benefit of the research without delay".[51]

Some users do not have time to invest in reading large and complex documents and/or may lack awareness or be unable to access newly published research. Researchers are therefore developing skills to use creative communication methods such as illustrations, blogs, infographics and board games to share the findings of systematic reviews.[52]

Automation

Living systematic reviews are a newer kind of semi-automated, up-to-date online summaries of research that are updated as new research becomes available.[53] The difference between a living systematic review and a conventional systematic review is the publication format. Living systematic reviews are "dynamic, persistent, online-only evidence summaries, which are updated rapidly and frequently".[54]

The automation or semi-automation of the systematic process itself is increasingly being explored. While little evidence exists to demonstrate it is as accurate or involves less manual effort, efforts that promote training and using artificial intelligence for the process are increasing.[55][53]

Research fields

Health and medicine

Current use of systematic reviews in medicine

Many organisations around the world use systematic reviews, with the methodology depending on the guidelines being followed. Organisations which use systematic reviews in medicine and human health include the National Institute for Health and Care Excellence (NICE, UK), the Agency for Healthcare Research and Quality (AHRQ, US), and the World Health Organization. Most notable among international organisations is Cochrane, a group of over 37,000 specialists in healthcare who systematically review randomised trials of the effects of prevention, treatments, and rehabilitation as well as health systems interventions. They sometimes also include the results of other types of research. Cochrane Reviews are published in The Cochrane Database of Systematic Reviews section of the Cochrane Library. The 2015 impact factor for The Cochrane Database of Systematic Reviews was 6.103, and it was ranked 12th in the Medicine, General & Internal category.[56]

There are several types of systematic reviews, including:[57][58][59][60]

- Intervention reviews assess the benefits and harms of interventions used in healthcare and health policy.

- Diagnostic test accuracy reviews assess how well a diagnostic test performs in diagnosing and detecting a particular disease. Free software with a graphical user interface, such as MetaDTA and CAST-HSROC, is available for conducting diagnostic test accuracy reviews.[61][62]

- Methodology reviews address issues relevant to how systematic reviews and clinical trials are conducted and reported.

- Qualitative reviews synthesize qualitative evidence to address questions on aspects other than effectiveness.

- Prognosis reviews address the probable course or future outcome(s) of people with a health problem.

- Overviews of Systematic Reviews (OoRs) compile multiple pieces of evidence from systematic reviews into a single accessible document, sometimes referred to as umbrella reviews.

- Living systematic reviews are continually updated, incorporating relevant new evidence as it becomes available.[63]

- Rapid reviews are a form of knowledge synthesis that "accelerates the process of conducting a traditional systematic review through streamlining or omitting specific methods to produce evidence for stakeholders in a resource-efficient manner".[64]

- Reviews of complex health interventions in complex systems aim to improve evidence synthesis and guideline development.[65]

Patient and public involvement in systematic reviews

There are various ways patients and the public can be involved in producing systematic reviews and other outputs. Tasks for public members can be organised as 'entry level' or higher. Tasks include:

- Joining a collaborative volunteer effort to help categorise and summarise healthcare evidence[66]

- Data extraction and risk of bias assessment

- Translation of reviews into other languages

A systematic review of how people were involved in systematic reviews aimed to document the evidence-base relating to stakeholder involvement in systematic reviews and to use this evidence to describe how stakeholders have been involved in systematic reviews.[67] Thirty percent involved patients and/or carers. The ACTIVE framework provides a way to describe how people are involved in systematic reviews and may be used as a way to support systematic review authors in planning people's involvement.[68] Standardised Data on Initiatives (STARDIT) is another proposed way of reporting who has been involved in which tasks during research, including systematic reviews.[69][70]

There has been some criticism of how Cochrane prioritises systematic reviews.[71] Cochrane has a project that involved people in helping identify research priorities to inform Cochrane Reviews.[72][73] In 2014, the Cochrane–Wikipedia partnership was formalised.[74]

Environmental health and toxicology

Systematic reviews are a relatively recent innovation in the field of environmental health and toxicology. Although mooted in the mid-2000s, the first full frameworks for conduct of systematic reviews of environmental health evidence were published in 2014 by the US National Toxicology Program's Office of Health Assessment and Translation[75] and the Navigation Guide at the University of California San Francisco's Program on Reproductive Health and the Environment.[76] Uptake has since been rapid, with the estimated number of systematic reviews in the field doubling since 2016 and the first consensus recommendations on best practice, as a precursor to a more general standard, being published in 2020.[77]

Social, behavioural, and educational

In 1959, social scientist and social work educator Barbara Wootton published one of the first contemporary systematic reviews of literature on anti-social behavior as part of her work, Social Science and Social Pathology.[78][79]

Several organisations use systematic reviews in social, behavioural, and educational areas of evidence-based policy, including the National Institute for Health and Care Excellence (NICE, UK), Social Care Institute for Excellence (SCIE, UK), the Agency for Healthcare Research and Quality (AHRQ, US), the World Health Organization, the International Initiative for Impact Evaluation (3ie), the Joanna Briggs Institute, and the Campbell Collaboration. The quasi-standard for systematic review in the social sciences is based on the procedures proposed by the Campbell Collaboration, which is one of several groups promoting evidence-based policy in the social sciences.[80]

Others

Some attempts to transfer the procedures from medicine to business research have been made,[81] including a step-by-step approach,[82][83] and developing a standard procedure for conducting systematic literature reviews in business and economics.

Systematic reviews are increasingly prevalent in other fields, such as international development research.[84] Subsequently, several donors (including the UK Department for International Development (DFID) and AusAid) are focusing more on testing the appropriateness of systematic reviews in assessing the impacts of development and humanitarian interventions.[84]

The Collaboration for Environmental Evidence (CEE) has a journal titled Environmental Evidence, which publishes systematic reviews, review protocols, and systematic maps on the impacts of human activity and the effectiveness of management interventions.[85]

Review tools

A 2022 publication identified 24 systematic review tools and ranked them by inclusion of 30 features deemed most important when performing a systematic review in accordance with best practices. The top six software tools (with at least 21/30 key features) are all proprietary paid platforms, typically web-based, and include:[86]

- Giotto Compliance

- DistillerSR

- Nested Knowledge

- EPPI-Reviewer Web

- LitStream

- JBI SUMARI

The Cochrane Collaboration provides a handbook for systematic reviewers of interventions which "provides guidance to authors for the preparation of Cochrane Intervention reviews."[37] The Cochrane Handbook also outlines steps for preparing a systematic review[37] and forms the basis of two sets of standards for the conduct and reporting of Cochrane Intervention Reviews (MECIR; Methodological Expectations of Cochrane Intervention Reviews).[87] It also contains guidance on integrating patient-reported outcomes into reviews.

Limitations

Out-dated or risk of bias

While systematic reviews are regarded as the strongest form of evidence, a 2003 review of 300 studies found that not all systematic reviews were equally reliable, and that their reporting can be improved by a universally agreed upon set of standards and guidelines.[88] A further study by the same group found that of 100 systematic reviews monitored, 7% needed updating at the time of publication, another 4% within a year, and another 11% within 2 years; this figure was higher in rapidly changing fields of medicine, especially cardiovascular medicine.[89] A 2003 study suggested that extending searches beyond major databases, perhaps into grey literature, would increase the effectiveness of reviews.[90]

Some authors have highlighted problems with systematic reviews, particularly those conducted by Cochrane, noting that published reviews are often biased, out of date, and excessively long.[91] Cochrane reviews have been criticized as not being sufficiently critical in the selection of trials and including too many of low quality. They proposed several solutions, including limiting studies in meta-analyses and reviews to registered clinical trials, requiring that original data be made available for statistical checking, paying greater attention to sample size estimates, and eliminating dependence on only published data. Some of these difficulties were noted as early as 1994:

much poor research arises because researchers feel compelled for career reasons to carry out research that they are ill-equipped to perform, and nobody stops them.

— Altman DG, 1994[92]

Methodological limitations of meta-analysis have also been noted.[93] Another concern is that the methods used to conduct a systematic review are sometimes changed once researchers see the available trials they are going to include.[94] Some websites have described retractions of systematic reviews and published reports of studies included in published systematic reviews.[95][96][97] Eligibility criteria that are arbitrary may affect the perceived quality of the review.[98][99]

Limited reporting of data from human studies

The AllTrials campaign reports that around half of clinical trials have never reported results, and it works to improve reporting.[100] 'Positive' trials were twice as likely to be published as those with 'negative' results.[101]

As of 2016, it is legal for for-profit companies to conduct clinical trials and not publish the results.[102] For example, in the past 10 years, 8.7 million patients have taken part in trials that have not published results.[102] These factors mean that it is likely there is a significant publication bias, with only 'positive' or perceived favourable results being published. A recent systematic review of industry sponsorship and research outcomes concluded that "sponsorship of drug and device studies by the manufacturing company leads to more favorable efficacy results and conclusions than sponsorship by other sources" and that there is an industry bias that cannot be explained by standard 'risk of bias' assessments.[103]

Poor compliance with review reporting guidelines

The rapid growth of systematic reviews in recent years has been accompanied by the attendant issue of poor compliance with guidelines, particularly in areas such as declaration of registered study protocols, funding source declaration, risk of bias data, issues resulting from data abstraction, and description of clear study objectives.[104][105][106][107][108] A host of studies have identified weaknesses in the rigour and reproducibility of search strategies in systematic reviews.[109][110][111][112][113][114] To remedy this issue, a new PRISMA guideline extension called PRISMA-S is being developed.[115] Furthermore, tools and checklists for peer-reviewing search strategies have been created, such as the Peer Review of Electronic Search Strategies (PRESS) guidelines.[116]

A key challenge for using systematic reviews in clinical practice and healthcare policy is assessing the quality of a given review. Consequently, a range of appraisal tools to evaluate systematic reviews have been designed. The two most popular measurement instruments and scoring tools for systematic review quality assessment are AMSTAR 2 (a measurement tool to assess the methodological quality of systematic reviews)[117][118][119] and ROBIS (Risk Of Bias In Systematic reviews); however, these are not appropriate for all systematic review types.[120]

History

The first publication that is now recognized as equivalent to a modern systematic review was a 1753 paper by James Lind, which reviewed all of the previous publications about scurvy.[121] Systematic reviews appeared only sporadically until the 1980s, and became common after 2000.[121] More than 10,000 systematic reviews are published each year.[121]

History in medicine

A 1904 British Medical Journal paper by Karl Pearson collated data from several studies in the UK, India and South Africa of typhoid inoculation. He used a meta-analytic approach to aggregate the outcomes of multiple clinical studies.[122] In 1972, Archie Cochrane wrote: "It is surely a great criticism of our profession that we have not organised a critical summary, by specialty or subspecialty, adapted periodically, of all relevant randomised controlled trials".[123] Critical appraisal and synthesis of research findings in a systematic way emerged in 1975 under the term 'meta analysis'.[124][125] Early syntheses were conducted in broad areas of public policy and social interventions, with systematic research synthesis applied to medicine and health.[126] Inspired by his own personal experiences as a senior medical officer in prisoner of war camps, Archie Cochrane worked to improve the scientific method in medical evidence.[127] His call for the increased use of randomised controlled trials and systematic reviews led to the creation of The Cochrane Collaboration,[128] which was founded in 1993 and named after him, building on the work by Iain Chalmers and colleagues in the area of pregnancy and childbirth.[129][123]

See also

References

This article was submitted to WikiJournal of Medicine for external academic peer review in 2019 (reviewer reports). The updated content was reintegrated into the Wikipedia page under a CC-BY-SA-3.0 license (2020). The version of record as reviewed is: Jack Nunn; Steven Chang; et al. (9 November 2020). "What are Systematic Reviews?" (PDF). WikiJournal of Medicine. 7 (1): 5. doi:10.15347/WJM/2020.005. ISSN 2002-4436. Wikidata Q99440266. STARDIT report Q101116128.

External links

- Systematic Review Tools — Search and list of systematic review software tools

- Cochrane Collaboration

- MeSH: Review Literature—articles about the review process

- MeSH: Review [Publication Type] - limit search results to reviews

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) Statement, "an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses"

- PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and explanation

- Animated Storyboard: What Are Systematic Reviews? - Cochrane Consumers and Communication Group

- Sysrev - a free platform with open access systematic reviews.

- STARDIT - an open access data-sharing system to standardise the way that information about initiatives is reported.